This blog post explains how the OSH (Orchestrate® Shell Script) script is generated and describes the execution flow of IBM InfoSphere DataStage jobs on the Information Server engine. It also covers the roles of the Conductor Node, Section Leaders and Players in a DataStage job.

OSH script:

The IBM InfoSphere DataStage and QualityStage Designer client creates IBM InfoSphere DataStage jobs. These jobs are compiled into parallel job flows and reusable components that execute on the parallel Information Server engine. The Designer lets you use familiar graphical point-and-click techniques to develop job flows that extract, cleanse, transform, integrate, and load data into target files, target systems, or packaged applications.

The Designer generates all the code. This includes the OSH (Orchestrate Shell Script) and the C++ code for any Transformer stages used. Briefly, the Designer performs the following tasks:

1. Validates link requirements, mandatory stage options, transformer logic, etc.
2. Generates the OSH representation of data flows and stages (representations of framework “operators”).
3. Generates transform code for each Transformer stage. This code is then compiled into C++ and then into the corresponding native operators.
4. Compiles reusable BuildOp stages, which can be built from the Designer GUI or from the command line.

Here is a brief primer on the OSH:

1. A comment block introduces each operator. The order of these blocks follows the order in which the stages were added to the canvas.
2. OSH uses the familiar syntax of the UNIX shell. Each operator has a name, a schema, operator options (in “-name value” format), inputs (indicated by n<, where n is the input number) and outputs (indicated by n>, where n is the output number).
3. For every operator, the input and output data sets are numbered sequentially starting from zero.
4. Virtual data sets (in-memory native representations of data links) are generated to connect operators.
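The primer above can be illustrated with a small Python sketch that renders an OSH-like stanza for one operator. Each stanza has a comment block, the operator name, "-name value" options, inputs written as n< and outputs written as n>, numbered from zero. This is a sketch under stated assumptions, not the Designer's real output. The layout is simplified. The `Operator` class, the option name and the `'<link>.v'` data set naming are hypothetical placeholders.

```python
from dataclasses import dataclass, field


@dataclass
class Operator:
    """Hypothetical in-memory description of one stage and its framework operator."""
    stage: str                                   # stage name on the Designer canvas
    name: str                                    # framework operator name
    options: dict = field(default_factory=dict)  # option name -> value
    inputs: list = field(default_factory=list)   # names of incoming links
    outputs: list = field(default_factory=list)  # names of outgoing links


def virtual_dataset(link: str) -> str:
    # Hypothetical naming rule: the virtual data set is named after the link,
    # which is why meaningful link names make generated OSH easier to read.
    return f"'{link}.v'"


def to_osh(op: Operator) -> str:
    """Render an OSH-like stanza for one operator (illustrative layout only)."""
    lines = [f"#### STAGE: {op.stage}", op.name]           # comment block, then operator name
    lines += [f"-{k} {v}" for k, v in op.options.items()]  # "-name value" options
    # Inputs (n<) and outputs (n>) are each numbered from zero.
    lines += [f"{n}< {virtual_dataset(link)}" for n, link in enumerate(op.inputs)]
    lines += [f"{n}> {virtual_dataset(link)}" for n, link in enumerate(op.outputs)]
    lines.append(";")                                       # ends this operator in the sketch
    return "\n".join(lines)


if __name__ == "__main__":
    # Hypothetical Transformer with one input link and two output links.
    xfm = Operator("Transformer_1", "transform", {"example_option": "value"},
                   ["lnk_in"], ["lnk_good", "lnk_reject"])
    print(to_osh(xfm))
```

In this example, the single input becomes `0< 'lnk_in.v'`. The two outputs become `0> 'lnk_good.v'` and `1> 'lnk_reject.v'`, so the numbering restarts at zero for each direction.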

Note: The actual execution order of operators is determined by the input/output designators, not by the placement of stages on the diagram. The data sets connect the OSH operators. These are “virtual data sets”, that is, in-memory data flows. Link names are used in data set names, so it is good practice to give links meaningful names.
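The note above says that operator order comes from the data set connections, not from the canvas layout. The following Python sketch shows this by deriving an order from those connections with a topological sort (Kahn's algorithm). It assumes a hypothetical, already-parsed structure that maps each operator to its input and output data set names. The parallel engine actually runs operators concurrently as a pipeline. The order computed here therefore reflects data dependencies, not a strict run-one-then-the-next sequence.

```python
from collections import defaultdict, deque


def execution_order(operators):
    """Order operators by data-set connections rather than canvas position.

    `operators` maps an operator name to (inputs, outputs), each a list of
    virtual data set names. This structure is a hypothetical stand-in for parsed OSH.
    """
    producer = {}  # data set name -> operator that writes it
    for op, (_, outs) in operators.items():
        for ds in outs:
            producer[ds] = op

    downstream = defaultdict(list)
    indegree = {op: 0 for op in operators}
    for op, (ins, _) in operators.items():
        for ds in ins:
            src = producer.get(ds)
            if src is not None:
                downstream[src].append(op)
                indegree[op] += 1

    # Start with operators that read no data set produced by another operator.
    ready = deque(op for op, d in indegree.items() if d == 0)
    order = []
    while ready:
        op = ready.popleft()
        order.append(op)
        for nxt in downstream[op]:
            indegree[nxt] -= 1
            if indegree[nxt] == 0:
                ready.append(nxt)
    if len(order) != len(operators):
        raise ValueError("cycle in data-set connections")
    return order


if __name__ == "__main__":
    # Stages listed in the "wrong" order, as if added to the canvas backwards.
    ops = {
        "Target": (["xfm_out.v"], []),
        "Transformer": (["src_out.v"], ["xfm_out.v"]),
        "Source": ([], ["src_out.v"]),
    }
    print(execution_order(ops))  # ['Source', 'Transformer', 'Target']
```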

Framework (Information Server Engine) terms and DataStage terms have direct equivalents. The GUI often mixes terms from both paradigms. Runtime messages use framework terminology because execution takes place in the framework engine. The following list shows the equivalence between framework and DataStage terms:

1. Schema corresponds to table definition
2. Property corresponds to format
3. Type corresponds to SQL type and length
4. Virtual data set corresponds to link
5. Record/field corresponds to row/column
6. Operator corresponds to stage
7. Step, flow, OSH command correspond to a job
8. Framework corresponds to Information Server Engine
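Runtime messages use framework terms, so it can help to keep the equivalence list above as a lookup table. The minimal Python sketch below does exactly that. The dictionary simply restates the list, with "record/field" split into two entries. The function name is hypothetical.

```python
# Framework term -> DataStage term, restating the equivalence list above.
FRAMEWORK_TO_DATASTAGE = {
    "schema": "table definition",
    "property": "format",
    "type": "SQL type and length",
    "virtual data set": "link",
    "record": "row",
    "field": "column",
    "operator": "stage",
    "step": "job",
    "flow": "job",
    "osh command": "job",
    "framework": "Information Server Engine",
}


def to_datastage_term(term: str) -> str:
    """Return the DataStage equivalent of a framework term (case-insensitive).

    Unknown terms are returned unchanged.
    """
    return FRAMEWORK_TO_DATASTAGE.get(term.strip().lower(), term)


if __name__ == "__main__":
    print(to_datastage_term("Operator"))  # stage
```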

Execution flow

When you execute a job, the generated OSH and the contents of the configuration file ($APT_CONFIG_FILE) are used to compose a “score”. The score is similar to an SQL query optimization plan. At runtime, IBM InfoSphere DataStage identifies the degree of parallelism and the node assignments for each operator. It inserts sorts and partitioners as needed to ensure correct results. It also defines the connection topology (virtual data sets/links) between adjacent operators/stages and inserts buffer operators to prevent deadlocks (for example, in fork-joins). Finally, it defines the number of actual OS processes. Where appropriate, multiple operators/stages are combined within a single OS process to improve performance and optimize resource requirements.
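The configuration file is one input to the score, so a sketch of reading it may help. The Python example below extracts the logical node names from a simplified $APT_CONFIG_FILE. The sample file, its host name and its paths are placeholders. The assumption that the default degree of parallelism equals the node count is a simplification. It ignores node pools, node constraints and sequential operators.

```python
import re

# Simplified $APT_CONFIG_FILE with two logical nodes (host and paths are placeholders).
SAMPLE_CONFIG = """
{
  node "node1"
  {
    fastname "etlhost"
    pools ""
    resource disk "/data/ds/node1" {pools ""}
    resource scratchdisk "/scratch/node1" {pools ""}
  }
  node "node2"
  {
    fastname "etlhost"
    pools ""
    resource disk "/data/ds/node2" {pools ""}
    resource scratchdisk "/scratch/node2" {pools ""}
  }
}
"""

# Matches a `node "name"` declaration; paths such as "/data/ds/node1" do not match
# because the word "node" there is not followed by whitespace and a quoted name.
NODE_RE = re.compile(r'\bnode\s+"([^"]+)"')


def logical_nodes(config_text: str) -> list:
    """Return the logical node names declared in a configuration file."""
    return NODE_RE.findall(config_text)


if __name__ == "__main__":
    nodes = logical_nodes(SAMPLE_CONFIG)
    # Simplification: an unconstrained parallel operator runs once per node.
    print(nodes, "-> assumed degree of parallelism:", len(nodes))
```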

The job score is used to fork processes, with communication interconnects for data, messages and control. Processing begins once the job score and the processes have been created. Job processing ends in one of three ways: the final operator processes the last row of data, any operator encounters a fatal error, or the job is halted by DataStage Job Control or by human intervention such as a DataStage Director STOP.

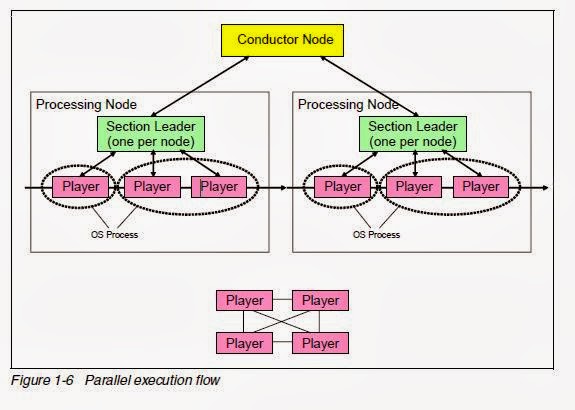

Job scores are divided into two sections: data sets (partitioning and collecting) and operators (node/operator mapping). Both sections identify sequential or parallel processing. The execution environment (the “orchestra”) manages control and message flow across processes. It consists of the conductor node and one or more processing nodes, as shown in Figure 1-6. Actual data flows from player to player. The conductor and section leaders are used only to control process execution through the control and message channels.

The Conductor is the initial framework process. It creates the Section Leader (SL) processes (one per node), consolidates messages into the DataStage log, and manages orderly shutdown. The Conductor node runs the start-up process. The Conductor also communicates with the players.

Note:
1. Set $APT_STARTUP_STATUS to show each step of the job startup, and set $APT_PM_SHOW_PIDS to show process IDs in the DataStage log.
2. You can write the score to the job log by setting $APT_DUMP_SCORE. To identify the score dump, look for “main program: This step....”.
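Score dumps are found by searching the log text, so the lookup can be sketched in a few lines. The Python example below picks the score dump entries out of log text. It assumes the log has already been exported as a list of entry strings, and the export step is not shown. The post quotes the marker as "main program: This step". Some logs may spell the prefix "main_program", so the pattern accepts either form as a hedge.

```python
import re

# Marker quoted in the post; "main_program" is also accepted in case the
# log spells the prefix with an underscore.
SCORE_MARKER = re.compile(r"main[ _]program: This step")


def score_dumps(log_entries):
    """Return the log entries that look like $APT_DUMP_SCORE score dumps."""
    return [entry for entry in log_entries if SCORE_MARKER.search(entry)]


if __name__ == "__main__":
    # Hypothetical exported log entries.
    entries = [
        "Starting Job demo_job.",
        "main_program: This step has 2 datasets: ...",
        "Transformer_1,0: Input records = 100",
    ]
    for dump in score_dumps(entries):
        print(dump)
```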

The Section Leader is a process that forks player processes (one per stage) and manages up/down communications. SLs communicate only with the conductor and the player processes. For a given parallel configuration file, one section leader is started for each logical node.

Players are the actual processes associated with the stages. Each player sends its stderr and stdout to its SL, establishes connections to other players for data flow, and cleans up on completion. Each player must be able to communicate with every other player.
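The process roles described above can be turned into a rough process count. The Python sketch below uses only three rules: one conductor, one section leader per logical node, and one player per stage on each node where that stage runs. It also treats an operator that has been combined into another as sharing that operator's process. This is a back-of-the-envelope model, not how the engine computes its score. It ignores node pools, constraints and inserted buffer operators. The `ScoreOp` fields are hypothetical.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class ScoreOp:
    """One (hypothetical) operator entry from a job score."""
    name: str
    parallel: bool = True                # False: a sequential operator runs on one node
    combined_into: Optional[str] = None  # set if it shares another operator's process


def estimate_processes(ops, nodes: int) -> dict:
    """Rough process count for one job run under a simplified model."""
    players = 0
    for op in ops:
        if op.combined_into is not None:
            continue  # runs inside the process of the operator it was combined into
        players += nodes if op.parallel else 1
    return {
        "conductor": 1,            # the initial framework process
        "section_leaders": nodes,  # one per logical node
        "players": players,        # one per (uncombined) operator per node it runs on
        "total": 1 + nodes + players,
    }


if __name__ == "__main__":
    ops = [
        ScoreOp("import", parallel=False),          # sequential file read
        ScoreOp("transform"),
        ScoreOp("copy", combined_into="transform"),
        ScoreOp("peek"),
    ]
    print(estimate_processes(ops, nodes=2))
```

With two nodes, this example counts 1 + 2 + 0 + 2 = 5 players, so the model gives 8 processes in total.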

There are separate communication channels (pathways) for control, errors, messages and data. The data channel does not pass through the section leader or the conductor, because that would limit scalability. Instead, data flows directly from the upstream operator to the downstream operator.
