A. The partitioning mechanism divides a dataset into smaller segments, each of which is then processed independently by a node in parallel. This lets a job take advantage of parallel architectures such as SMP, MPP, grid computing, and clusters.

1. Keyless partitioning

Keyless partitioning methods distribute rows without examining the contents of the data.

| Keyless partition method | Description |
| --- | --- |
| Same | Retains the existing partitioning from the previous stage. |
| Round robin | Distributes rows evenly across partitions, in a round-robin partition assignment. |
| Random | Distributes rows evenly across partitions, in a random partition assignment. |
| Entire | Each partition receives the entire dataset. |

2. Keyed partitioning

Keyed partitioning examines the data values in one or more key columns, ensuring that records with the same values in those key columns are assigned to the same partition. It is used when business rules (for example, Remove Duplicates) or stage requirements (for example, Join) require processing on groups of related records.

Keyed partitioning

Hash: Assigns rows with the same

values in one or more key columns to the Same partition using an internal hashing algorithm.

Modulus : Assigns rows with the same

values in a single integer key column to the same partition using a simple

modulus calculation.

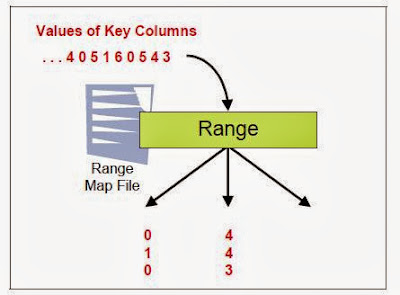

Range : Assigns rows with the same

values in one or more key columns to the same partition using a specified range

map generated by pre-reading the dataset.

DB2 : For DB2 Enterprise Server Edition with DPF

(DB2/UDB) only Matches the internal partitioning of the specified source or

target table.

Data Partitioning Methods:

DataStage supports several data partitioning methods, which can be applied in parallel stages:

1. Auto: default partitioning method

It chooses the best partitioning method depending on the mode of execution of the current stage and the preceding stage, and on the number of nodes available in the configuration file. DataStage Enterprise Edition (EE) decides between Same and Round Robin partitioning. Typically, Same is used between two parallel stages and Round Robin is used between a sequential stage and a parallel (EE) stage.

2. DB2: rarely used partitioning method

Partitions an input dataset in the same way that DB2 would partition it.

For example, if this method is used to partition an input dataset containing information for an existing DB2 table, records are assigned to the processing node containing the corresponding DB2 record. Then, during the execution of the parallel operator, both the input record and the DB2 table record are local to the processing node.


3. Entire: less frequently used partitioning method

Every node receives the complete set of input data; for instance, in a four-node configuration, every record is sent to all four nodes. This method is mostly used with stages that create lookup tables from their input. Because all rows of the dataset are copied to each partition, duplicated rows are stored and the data volume increases significantly.
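To make the cost of Entire partitioning concrete, here is a minimal Python sketch (not DataStage code). The function name `entire_partition` and the sample lookup rows are assumptions made up for illustration.

```python
def entire_partition(rows, num_partitions):
    """Give every partition its own full copy of the input rows."""
    return [list(rows) for _ in range(num_partitions)]

# Hypothetical lookup data: country code -> country name.
lookup_rows = [("US", "United States"), ("DE", "Germany"), ("JP", "Japan")]
partitions = entire_partition(lookup_rows, 4)

# Each node can build its own lookup table and resolve any key locally.
lookup_tables = [dict(p) for p in partitions]

# Stored volume grows with the node count: 4 copies of 3 rows.
total_rows_stored = sum(len(p) for p in partitions)
print(total_rows_stored)
```

Every node can answer any lookup without talking to another node, at the price of storing the data once per node.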

4. Hash: frequently used partitioning method

Rows with the same value in the key column (or combination of key columns) go to the same partition. Hash is used very often and can improve performance, but keep in mind that it does not guarantee load balance: a poorly chosen key leads to skewed data and poor performance. When you select this method you must also select the key column(s). Data is sent to the available nodes based on the key values; that is, records with the same value for the defined key field go to the same processing node.

Note: When hash partitioning, choose hashing keys with a large number of distinct values, so that rows can spread across all partitions.

Reason: For example, if you hash partition a dataset based on a zip code field, where a large percentage of records are from one or two zip codes, it can lead to bottlenecks because some nodes are required to process more records than other nodes.
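The zip code skew described above can be reproduced with a small Python sketch. This is only an illustration: DataStage's internal hashing algorithm is not specified here, so `zlib.crc32` stands in for it, and the function name and sample data are hypothetical.

```python
import zlib
from collections import Counter

def hash_partition(key: str, num_partitions: int) -> int:
    """Map a key to a partition number; equal keys always map to the same one.

    crc32 is a stand-in hash, not the algorithm DataStage uses internally.
    """
    return zlib.crc32(key.encode("utf-8")) % num_partitions

# Hypothetical input: 80 of 100 records share a single zip code.
zip_codes = ["10001"] * 80 + ["94105"] * 10 + ["60601"] * 10

counts = Counter(hash_partition(z, 4) for z in zip_codes)
for partition, rows in sorted(counts.items()):
    print(f"partition {partition}: {rows} rows")
```

Whichever partition the dominant zip code lands on receives at least 80 of the 100 rows, no matter how many nodes are available.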

5. Modulus: frequently used partitioning method

Works like hash partitioning, but the key must be a single numeric (integer) column. The partition is the remainder of the key value divided by the number of partitions:

partition = key_value MOD number_of_partitions

Because modulus partitioning is simpler and faster than hash, it is the better choice when you have a single integer key column. Modulus partitioning cannot be used for composite keys, or for a non-integer key column.
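As a worked example of the modulus calculation, the following Python sketch assigns a few integer keys to four partitions. The function name and key values are chosen for illustration only.

```python
def modulus_partition(key_value: int, num_partitions: int) -> int:
    """Partition number is the remainder of key_value / num_partitions."""
    return key_value % num_partitions

keys = [10, 11, 12, 13, 14, 15]
assignments = {k: modulus_partition(k, 4) for k in keys}
print(assignments)
# {10: 2, 11: 3, 12: 0, 13: 1, 14: 2, 15: 3}
```

Keys 10 and 14 share remainder 2, so they land in the same partition; any two rows with an equal key always do.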

6. Random: less frequently used partitioning method

Records are randomly distributed across all processing nodes. Like round robin, random partitioning can rebalance the partitions of an input data set so that each processing node receives an approximately equal-sized partition. Random partitioning has slightly higher overhead than round robin because of the extra processing required to calculate a random value for each record.
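A rough Python sketch of random partitioning follows; the function name and the `seed` parameter are assumptions for illustration. The per-record call to the random number generator is the extra work that round robin avoids.

```python
import random

def random_partition(rows, num_partitions, seed=None):
    """Send each row to a randomly chosen partition."""
    rng = random.Random(seed)  # seed only makes the sketch repeatable
    partitions = [[] for _ in range(num_partitions)]
    for row in rows:
        # One random draw per record: the overhead mentioned above.
        partitions[rng.randrange(num_partitions)].append(row)
    return partitions

rows = list(range(1000))
partitions = random_partition(rows, 4, seed=42)
sizes = [len(p) for p in partitions]
print(sizes)  # roughly, but not exactly, 250 rows each
```

The sizes are only approximately equal, and a row's partition says nothing about its contents, so this method is keyless.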

7. Range: rarely used partitioning method

It divides a dataset into approximately equal-sized partitions, each of which contains records with key columns within a specific range. It guarantees that all records with the same partitioning key values are assigned to the same partition. It is an expensive refinement of hash partitioning: similar to hash, but the partition mapping is user-determined and the partitions are ordered. Rows are distributed according to the values in one or more key fields, using a range map (created with the 'Write Range Map' stage). Range partitioning requires processing the data twice, which makes it hard to justify in most jobs.


Note: To use the Range partitioner, a range map must first be created with the 'Write Range Map' stage.
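The two passes over the data can be sketched in Python as follows. This is an illustration of the idea, not of the 'Write Range Map' stage itself; `build_range_map` and `range_partition` are hypothetical names, and picking evenly spaced boundaries from the sorted keys is an assumed strategy.

```python
import bisect

def build_range_map(sample_keys, num_partitions):
    """First pass: pick num_partitions - 1 boundary keys from the sorted keys."""
    ordered = sorted(sample_keys)
    return [ordered[len(ordered) * i // num_partitions]
            for i in range(1, num_partitions)]

def range_partition(key, range_map):
    """Second pass: find the partition whose key range contains this key."""
    return bisect.bisect_right(range_map, key)

keys = list(range(100))
range_map = build_range_map(keys, 4)
print(range_map)  # [25, 50, 75]

sizes = [0, 0, 0, 0]
for k in keys:
    sizes[range_partition(k, range_map)] += 1
print(sizes)  # [25, 25, 25, 25]
```

Unlike hash, the partitions are ordered: every key in partition 0 is smaller than every key in partition 1, and so on.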

8. Round robin: frequently used partitioning method

The first record goes to the first processing node, the second to the second processing node, and so on. When DataStage reaches the last processing node in the system, it starts over.

This method is useful for resizing partitions of an input data set that are not equal in size.

The round robin method always creates approximately equal-sized partitions. This method is the one normally used when DataStage initially partitions data.


This partitioning method is very fast and yields a near-exact load balance between nodes: when a single input stream is distributed, partition row counts differ by at most one row.
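The round-robin assignment can be sketched in a few lines of Python. The function name is hypothetical and the sketch models a single sequential input being split across four nodes.

```python
def round_robin_partition(rows, num_partitions):
    """Deal rows out to partitions in turn, wrapping around after the last one."""
    partitions = [[] for _ in range(num_partitions)]
    for i, row in enumerate(rows):
        partitions[i % num_partitions].append(row)
    return partitions

parts = round_robin_partition(list(range(10)), 4)
print(parts)
# [[0, 4, 8], [1, 5, 9], [2, 6], [3, 7]]
```

With 10 rows and 4 partitions the sizes are 3, 3, 2, 2, which shows why the balance is near-exact rather than always exact.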

9. Same: frequently used partitioning method

In this partitioning method, records stay on the same processing node as they were in the previous stage; that is, they are not redistributed. Same is the fastest partitioning method.

This is normally the method DataStage uses when passing data between stages in your job (when using "Auto" partitioning).

Note: It keeps the partitioning that was produced by the previous stage.


B. Collecting is the opposite of partitioning and can be defined as the process of bringing data partitions back into a single sequential stream (one data partition).

Data collecting methods

A collector combines partitions into a single sequential stream. DataStage EE supports the following collecting algorithms:

1. Auto: the default algorithm reads rows from a partition as soon as they are ready. This may produce different row orders in different runs with identical data; the execution is non-deterministic.

2. Round Robin: picks rows from the input partitions in strict rotation, for instance the first row from partition 0, the next from partition 1, and so on, waiting for each partition in turn even if other partitions can produce rows faster.

3. Ordered: reads all rows from the first partition, then the second partition, then the third, and so on.

4. Sort Merge: produces a globally sorted sequential stream from rows that are already sorted within each partition. The algorithm always picks the partition that produces the row with the smallest key value. The order of rows with equal key values (that is, the ordering on non-key columns) is non-deterministic.
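The three deterministic collectors in the list above can be sketched in Python as follows. The function names are hypothetical, and the partitions are plain in-memory lists, so the sketch shows only the row order each collector produces, not the waiting behaviour. The Auto collector is omitted because its order depends on runtime timing.

```python
import heapq
from itertools import chain, zip_longest

_MISSING = object()  # marks exhausted partitions in the round-robin collector

def collect_ordered(partitions):
    """All rows of partition 0, then all of partition 1, and so on."""
    return list(chain.from_iterable(partitions))

def collect_round_robin(partitions):
    """One row from each partition in turn, skipping exhausted partitions."""
    out = []
    for group in zip_longest(*partitions, fillvalue=_MISSING):
        out.extend(row for row in group if row is not _MISSING)
    return out

def collect_sort_merge(partitions, key=lambda row: row):
    """Merge partitions that are each already sorted on the key."""
    return list(heapq.merge(*partitions, key=key))

partitions = [[1, 4, 9], [2, 3], [5, 6, 7, 8]]
print(collect_ordered(partitions))      # [1, 4, 9, 2, 3, 5, 6, 7, 8]
print(collect_round_robin(partitions))  # [1, 2, 5, 4, 3, 6, 9, 7, 8]
print(collect_sort_merge(partitions))   # [1, 2, 3, 4, 5, 6, 7, 8, 9]
```

Only the sort merge collector yields a globally sorted stream, and only because each input partition was sorted beforehand.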
