Datastage Jobs Best Practices and Performance Tuning

This Blog give you a complete details, how we can improve the performance of datastage Parallel jobs. Best practices we have to follow, while creating the datastage jobs.

This Blog will help you on following topics.

Refer This link as well : Parallel Job Performance Tuning Tips1

This Blog give you a complete details, how we can improve the performance of datastage Parallel jobs. Best practices we have to follow, while creating the datastage jobs.

This Blog will help you on following topics.

1.

Performance

Tuning Guidelines

1.1 General

Job Design

1.2 Transformer

Stage

1.3 Data

grouping Stages

1.4 ODBC

Stages

1.0

Performance Tuning Guidelines

1.1

General Job Design

Ø

Jobs

need to be developed using the modular development approach. Large jobs can be

broken down in to smaller modules, which help in improving the performance.

Ø

In

scenarios where same data (huge number of records) is to be shared among more

than one jobs in the same project, use dataset stage approach instead of

re-reading the same data again.

Ø

Eliminate

unused columns

Ø

Eliminate

unused references

Ø

If

the input file has huge number of records and the business logic allows

splitting up of the data, then run the job in parallel to have a significant

improvement in the performance

1.2

Transformer stage

Ø

Use

parallel transformer stage instead of filter/switch stages ( filter/switch

stages will take more resources for execution. For egs: in the case of filter

stage the were clause will get executed during run time, thus creating the

requirement for more resources, there by decaying the job performance)

Figure:

Example of using a Transformer stage instead of using a filter stage. The

filter condition is given in the constraint section of the transformer stage

properties.

Ø

Use

BuildOp stage only when the required logic cannot be implemented using the

parallel transformer stage.

Ø

Avoid

calling routines in derivations in the transformer stage. Implement the logic

in derivation. This will avoid the over head of procedure call

Ø

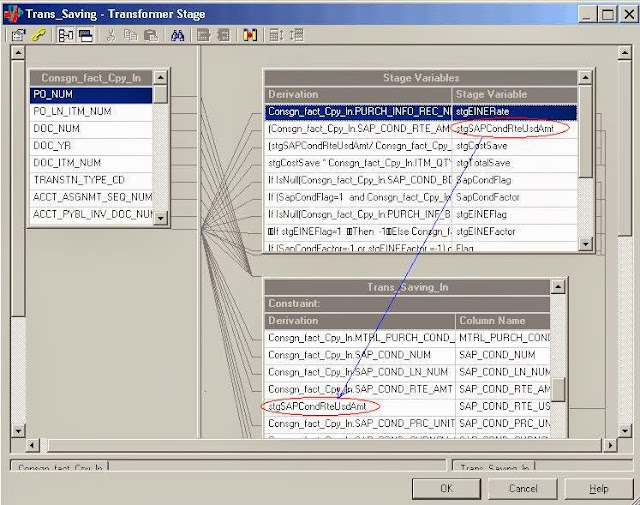

Implement

the logic using stage variables and call these stage variables in the

derivations. During processing the execution starts with stage variables then

constraints and then to individual columns. If ever there is a prerequisite formulae

which can be used by both constraints and also individual columns then we can

define it in stage variables so that it can be processed once and can be used

by multiple records. If ever we require the formulae to be modified for each

and every row then it is advisable to place in code in record level than stage

variable level

Figure:

Example for using stage variables in and using it in the derivations.

1.3 Data grouping stages

Ø

When dealing with stages like

Aggregator, Filter etc, always try to use sorted data for better performance

Figure: Sorting the input data on

the grouping keys in an aggregator stage

The example shown in the figure is

the properties window for an aggregator stage that finds out the sum of a

quantity column by grouping on the columns shown above. In such scenarios, we

will do sorting of the input data on the same columns so that the records with

same/similar values for these grouping columns will come together there by

increasing the performance. Also note that if we are using more than one node,

then the input dataset should be properly partitioned so that the similar

records will be available in the same node.

1.4

ODBC Stages

Ø

If

possible sort the data in ODBC stage itself; this will reduce the over head of

DS sorting the data. Don’t use the sort stage when we have ORDER BY clause in

ODBC sql

Ø

Select

only the required records or Remove the unwanted rows as early, so that the job

need not deal with unnecessary records causing performance degrade

Ø

Using

a constraint to filter a record is much slower as compared to having a SELECT….WHERE

in ODBC stage. User the power of

database where ever possible and reduce the over head for DS.

Figure:

Using the User-defined SQL option in ODBC stages to reduce the overhead of

datastage by specifying the WHERE and ORDER BY clause in the SQL used to get

data.

Avoid

using “like “ operator in user

Ø

defined queries in ODBC stages. But

one thing to be noted here is that , if our custom sql requires a must scenario

like it is doing a filter on some string pattern, we will be forced to use the

like pattern to get the requirement done.

Avoid

using

Ø

Stored Proceedures until and unless

the functionality cannot be implemented in Data Stage jobs.

No comments:

Post a Comment